Certain critics of rigid genetic determinism have long believed that the environment plays a major role in shaping intelligence. According to this view, enriched and stimulating surroundings should make one smarter. Playing Bach violin concertos to your fetus, for example, may nudge it toward future feats of fiddling, ingenious engineering, or novel acts of fiction. Although this view has been challenged, it persists in the minds of romantics and parents–two otherwise almost non-overlapping populations.

If environmental richness were actually correlated with intelligence, then those who live and work in the richest environments should be measurably smarter than those not so privileged. And what environment could be richer than the laboratory? Science is less a profession than a society within our society–a meritocracy based on an economy of ideas. Scientists inhabit a world in which knowledge accretes and credit accrues inexorably, as induction, peer review, and venture capital fuel the engines of discovery and innovation. Science has become the pre-eminent intellectual enterprise of our time–and American science proudly leads the world. The American biomedical laboratory is to the 21st century what the German university was to the 19th; what Dutch painting was to the 17th; the Portuguese sailing ship to the 16th; the Greek Lyceum to the minus 4th.

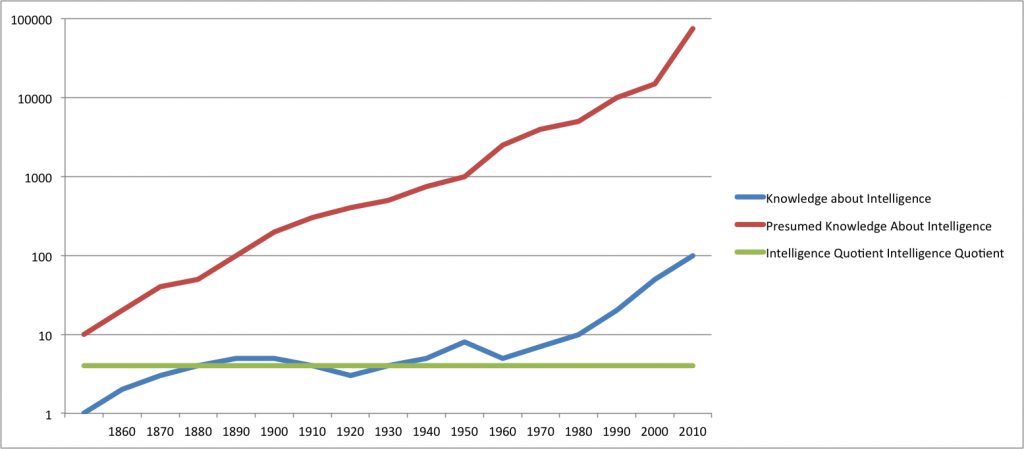

According to this view, then, scientists should be getting smarter. One might measure this in various ways, but Genotopia, being quantitatively challenged, prefers the more qualitative and subjective measure of whether we are making the same dumb mistakes over and over. So we are asking today: Are scientists repeating past errors, and thus sustaining and perhaps compounding their ignorance? Are scientists getting smarter?

Yes and no. A pair of articles (1, 2) recently published in the distinguished journal Trends in Genetics slaps a big juicy data point on the graph of scientific intelligence vs. time–and Senator, the trend in genetics is flat. The articles’ author, Gerald Crabtree, examines recent data on the genetics of intelligence. He estimates that, of the 20,000 or so human genes, between 2,000 and 5,000 are involved in intelligence. This, he argues, makes human intelligence surprisingly “fragile.” In a bit of handwaving so vigorous it calls to mind the semaphore version of Wuthering Heights, he asserts that these genes are strung like links in a chain, rather than multiply connected as nodes of a network. He imagines the genes for intelligence to function like a biochemical pathway, such that any mutation propagates “downstream,” diminishing the final product–the individual’s god-given and apparently irremediable brainpower.

Beginning in 1865, the polymath Francis Galton fretted that Englishmen were getting dumber. In his Hereditary Genius (1869) he concluded that “families are apt to become extinct in proportion to their dignity” (p. 140). He believed that “social agencies of an ordinary character, whose influences are little suspected, are at this moment working towards the degradation of human nature,” although he acknowledged that others were working toward its improvement. (1) The former clearly outweighed the latter in the mind of Galton and other Victorians; hence Galton’s “eugenics,” an ingenious scheme for human improvement through the machinations of “existing law and sentiment.” Galton’s eugenics was a system of incentives and penalties for marriage and childbirth, meted out according to his calculations of social worth.

This is a familiar argument to students of heredity. The idea that humans are degenerating–especially intellectually–persists independently of how much we know about intelligence and heredity. Which is to say, no matter how smart we get, we persist in believing we are getting dumber.

Galton was just one exponent of the so-called degeneration theory: the counter-intuitive but apparently irresistible idea that technological progress, medical advance, improvements in pedagogy, and civilization en masse in fact are producing the very opposite of what we supposed; namely, they are crippling the body, starving the spirit, and most of all eroding the mind.

The invention of intelligence testing by Alfred Binet just after the turn of the 20th century provided a powerful tool for proving the absurd. Though developed as a diagnostic to identify children who needed a bit of extra help in school–an enriched environment–IQ testing was quickly turned into a fire alarm for degeneration theorists. When the psychologist Robert M. Yerkes administered a version of the test to Army recruits during the First World War, he concluded that better than one in eight of America’s Finest were feebleminded–an inference that is either ridiculous or self-evident, depending on one’s view of the military.

These new ways of quantifying intelligence dovetailed perfectly with the new Mendelian genetics, which took off after Mendel’s work was rediscovered in 1900. Eugenics, a rather thin, anemic, blue-blooded affair in Victorian England, matured in Mendelian America into a strapping and cocky young buck, with advocates across the social and political spectrum embracing the notion of hereditary improvement. Eugenics advocates of the Progressive era tended to be intellectual determinists. Feeblemindedness–a catch-all for subnormal intelligence, from the drooling “idiot” to the high-functioning “moron”–was their greatest nightmare. It seemed to be the root of all social problems, from poverty to prostitution to ill health.

And the roots of intelligence were believed to be genetic. In England, Cyril Burt found that Spearman’s g (for “general intelligence”)–a statistical “thing,” derived by factor analysis and believed by Spearman, Burt, and others to be what IQ measures–was fixed and immutable, and (spoiler alert) that poor kids were innately stupider than rich kids. In America, the psychologist Henry Goddard, director of research at the Vineland Training School for feebleminded children in New Jersey and the man who had introduced IQ testing to the US, published Feeblemindedness: Its Causes and Consequences in 1914. Synthesizing years of observations and testing of slow children, he suggested–counter to all common sense–that feeblemindedness was due to a single Mendelian recessive gene. This conclusion was horrifying, because it made intelligence so vulnerable–so “fragile.” A single mutation could turn a normal individual into a feebleminded menace to society.

As Goddard put it in 1920, “The chief determiner of human conduct is the unitary mental process which we call intelligence.” The grade of intelligence for each individual, he said, “is determined by the kind of chromosomes that come together with the union of the germ cells.” Siding with Burt, the experienced psychologist wrote that intelligence was “conditioned by a nervous mechanism that is inborn,” and that it was “but little affected by any later influence” other than brain injury or serious disease. He called it “illogical and inefficient” to attempt any educational system without taking this immovable native intelligence into account (Goddard, Human Efficiency and Levels of Intelligence, 1920, p. 1).

This idea proved so attractive that a generation of otherwise competent and level-headed researchers and clinicians persisted in believing it, again despite it being as obvious as ever that the intellectual horsepower you put out depends on the quality of the engine parts, the regularity of the maintenance you invest in it, the training of the driver, and the instruments you use to measure it.

The geneticist Hermann Joseph Muller was not obsessed with intelligence, but he was obsessed with genetic degeneration. Trained at the knobby knees of some of the leading eugenicists of the Progressive era, Muller–a fruitfly geneticist by day and a bleeding-heart eugenicist by night–fretted through the 1920s and 1930s about environmental assaults on the gene pool: background solar radiation, radium watch-dials, shoe-store X-ray machines, etc. The dropping of the atomic bombs on the Japanese sent him into orbit. In 1946 he won a Nobel Prize for his discovery of X-ray-induced mutation, and he used his new fame to launch a new campaign on behalf of genetic degeneration. The presidency of the new American Society of Human Genetics became his bully pulpit, from which he preached nuclear fire and brimstone: our average “load of mutations,” he calculated, was about eight damaged genes–and growing. Crabtree’s argument thus sounds a lot like Muller grafted onto Henry Goddard.

In 1969, the educational psychologist Arthur Jensen produced a 120-page article that asserted that compensatory education–the idea that racial disparities in IQ correlate with opportunities more than innate ability, and accordingly that they can be reduced by enriching the learning environments of those who test low–was futile. Marshaling an impressive battery of data, most of which were derived from Cyril Burt, Jensen insisted that blacks are simply dumber than whites, and (with perhaps just a hint of wistfulness) that Asians are the smartest of all. Jensen may not have been a degenerationist sensu stricto, but his opposition to environmental improvement earns him a data point.

In 1994, Richard Herrnstein and Charles Murray published their infamous book, The Bell Curve. Their brick of a book was a masterly and authoritative rehash of Burt and Jensen, presented artfully on a platter of scientific reason and special pleading for the brand of reactionary politics that is reserved for those who can afford private tutors. They found no fault with either Burt’s data (debate continues, but it has been argued that Burt was a fraud) or his conclusion that IQ tests measure Spearman’s g, that g is strongly inherited, and that it is innate. Oh yes, and that intellectually, whites are a good bit smarter than blacks but slightly dumber than Asians. Since they believed there was nothing we could do about our innate intelligence, they concluded that our only hope was to “marry up” and try to have smarter children.

The Bell Curve appeared in the early years of the Human Genome Project. By 2000 we had a “draft” reference sequence for the human genome, and by 2003 “the” human genome was declared complete. Since the 1940s, human geneticists had focused on single-gene traits, especially diseases. One problem with the Progressive-era eugenicists, researchers argued, was that they had focused on socially determined and hopelessly complex traits; once geneticists set their sights on more straightforward targets, the science could at last advance.

But once this low-hanging fruit had been plucked, researchers began to address more complex traits once again. Disease susceptibility, multicausal diseases such as obesity, mental disorders, and intelligence returned to the fore. Papers such as Crabtree’s are vastly more sophisticated than Goddard’s tome. The simplistic notion of a single gene for intelligence is long gone; each of Crabtree’s 2,000–5,000 hypothetical intelligence genes hypothetically contributes but a tiny fraction of the whole. If you spit in a cup and send it to the personal genome testing company 23andMe, they will test your DNA for hundreds of genes, including one that supposedly adds 7 points to your IQ (roughly 6 percent for an IQ of 110).

Thus we are back around to a new version of genes for intelligence. Despite the sophistication and nuance of modern genomic analyses, we end up concluding once again that intelligence is mostly hereditary and therefore also racial, and that it’s declining.

Apart from the oddly repetitious and ad hoc nature of the degeneration argument, what is most disconcerting is this one glaring implication: that pointing out degeneration suggests a desire to do something about it. If someone were, say, sitting on the couch and called out, “The kitchen is sure a mess! Look at the plates all stacked there, covered with the remains of breakfast, and ick, flies are starting to gather on the hunks of Jarlsberg and Black Twig apples hardening and browning, respectively, on the cutting board,” you wouldn’t think he was simply making an observation. You’d think he was implying that you should get in there and clean up the damn kitchen. Which would be a dick move, because he’s just sitting there reading the Times, so why the heck doesn’t he do it himself? But the point is, sometimes observation implies action. If you are going to point out that the genome is broken, you must be thinking on some level that we can fix it. Thus, degeneration implies eugenics. Not necessarily the ugly kind of eugenics of coercive sterilization laws and racial extermination. But eugenics in Galton’s original sense of voluntary human hereditary improvement.

And thus, scientists do not appear to be getting any smarter. Despite the enriched environs of the modern biomedical laboratory, with gleaming toys and stimulating colleagues publishing a rich literature that has dismantled the simplistic genetic models and eugenic prejudices of yore, researchers such as Crabtree continue to believe the same old same old: that we’re getting dumber–or in danger of doing so.

In other words, sometimes the data don’t seem to matter. Prejudices and preconceptions leak into the laboratory, particularly on explosive issues such as intelligence and/or race, regardless of how heredity is constructed. Plenty of scientists are plenty smart, of course. But rehashing the degeneration theory of IQ does not demonstrate it.